Propagation Error: Age Assurance Meets the Online Safety Act

When age checks run into roadblocks, what do experts say went wrong? Are they right?

As the UK’s Online Safety Act came into effect last week, the result was predictably chaotic: rushed implementations, spoofable rollouts (including Discord and Reddit being tricked by Death Stranding’s character Sam Porter Bridges), and a flood of public scrutiny.

At Vys, we’ve worked in this space for years, partnering with clients to design privacy-preserving age assurance deployments that work, and publishing a practical deployment handbook to provide a self-serve resource to internal teams. We didn’t expect validation of our approach to come so quickly, but the past few weeks have shown how fragile weak age assurance strategies can be.

Whether you are just beginning to wrap your head around age assurance or have been grappling with it at your platform for a while, the overwhelming number of articles and commentary these last few weeks might still be too much to digest. In this issue, we break down the most critical developments from the last two weeks and offer some measured critiques of the loudest arguments.

Developments

Back in January 2025, we predicted that this year, companies would need to “design and implement a privacy-preserving age verification strategy that can flexibly adapt and modulate to different regional legal requirements, to avoid being shut out of key markets.” As the UK Online Safety Act came into force last week, we saw this prediction validated. The Act requires that “tech firms must introduce age checks to prevent children from accessing porn, self-harm, suicide and eating disorder content.”

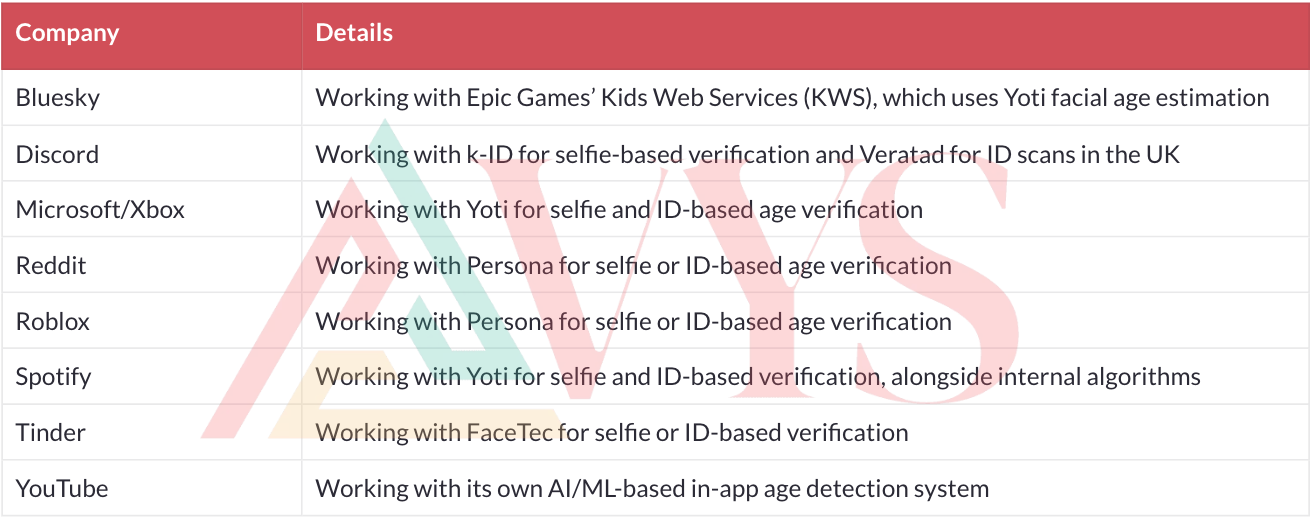

While adult sites predictably implemented age checks, several non-adult platforms also acted:

The consequences and outcries came swiftly:

Discord and Reddit’s age assurance strategies were easily spoofed: Days after deployment, users discovered ways to circumvent the systems using AI-generated and in-game character images. Tom Warren at The Verge reported that Reddit and Discord users fooled the platforms’ selfie-based checks by submitting a photo of a character from the video game Death Stranding.

Overbroad deployments of age assurance sparked significant backlash:

Reddit age-gated “sensitive” subreddits like r/IsraelCrimes or r/UkraineWarFootage

Spotify threatened to delete accounts that failed its age verification test

X age-gated a post from UK MP Grace Lewis announcing her resignation from the Labour Party.

The Trump administration, Elon Musk, and Taylor Lorenz quickly called it a censorship law:

U.S. Representative Jim Jordan, a close ally of Donald Trump, called the Act the “UK censorship law” in remarks ahead of his meeting with UK Technology Secretary Peter Kyle. Jordan raised concerns about the law’s implications for free speech and internet freedom, echoing criticisms from other conservative U.S. lawmakers.

Elon Musk / X claimed that the Act poses a "serious threat" to free speech, arguing its enforcement amounts to overreach and forced censorship. Musk called it "suppression of the people" and promoted petitions to repeal the legislation, warning that double compliance burdens could stifle platforms.

Taylor Lorenz said that the UK is censoring the internet, calling on American consumers and companies to “fight back to protect free speech online ASAP”. She flagged that soon after the Act went into force, “platforms began classifying nearly all breaking news footage, war coverage, investigative journalism, political protest material and information about reproductive and public health as ‘explicit’ or ‘harmful’ content”.

VPN usage (predictably) spiked: VPN usage in the UK surged by over 50%, according to multiple VPN providers cited by Wired reporters Lily Hay Newman and Matt Burgess. Popular VPN services like Proton and NordVPN reported “huge” spikes in downloads, with UK-based traffic jumping well above normal levels. Many users cited concerns about privacy and government overreach as their reason for circumventing age verification systems.

The loudest arguments we read

Regardless of your position on age assurance, this rollout offered plenty of ammunition.

For many, implementation of the Act was a case study in poor, overbroad regulation with no understanding of how technology works. Emma Roth (The Verge) argued that the internet was “not ready” for age assurance and that privacy risks were mounting. Intelligencer (NYMag) warned that ID-based access could become the norm, fundamentally turning the open web into an experience where revealing identity becomes mandatory before participation. Even the Financial Times (FT) editorial board lamented that VPNs were punching holes in the noble goal of protecting children online.

Yet supporters of the Act argued that the problem wasn’t the Act; it was shoddy implementation by platforms. Parmy Olson in Bloomberg UK called out platforms like Pornhub and Discord, noting that they needed to do more due diligence or build their own solutions instead of relying on last-minute fixes (her words, not ours). George Billinge in the Guardian highlighted that the Act is designed to be risk-based and flexible, not overly prescriptive. More granular guidance, he argued, would have made it outdated faster.

Finally, the hopeful among us started to look ahead to more privacy-preserving approaches in the future. Jacob Ridley (PCGamer) argued that to avoid compromising user privacy, zero-knowledge proofs (ZKPs) could be a promising alternative to verify age without disclosing identity, limiting surveillance and reducing breach risks. However, Alexis Hancock and Paige Collings (EFF) contended that ZKPs do nothing to prevent verifier abuse or collection of metadata, and cannot address privacy risks from broader data collection systems.

So who’s responsible?

Over a year ago, we made the case in this newsletter that a responsible approach to age verification would require industry, policymakers, and civil society to collaboratively define what a responsible, privacy-preserving approach could look like.

Unfortunately, that did not happen as quickly or extensively as we needed. Instead, an absence of ecosystem trust resulted in these different factions largely retreating into their established positions. Decisions to deploy became a show of force by either platform or policymaker, not an honest discussion about the tradeoffs we must consider.

In this context, some of the platforms’ most controversial decisions are entirely understandable. Ofcom’s Codes don’t just target extreme content; they require that children be protected from “dangerous stunts or challenges, misogynistic, violent, hateful or abusive material, and online bullying”.

That is incredibly broad, as any beleaguered trust & safety analyst at a platform will tell you. A tongue-in-cheek song title or an emotional post during wartime could easily be interpreted as misogynistic or hateful, subjecting platforms to potential penalties. Risk-averse platforms will err on the side of caution and block content entirely. If Ofcom had also provided more guidance on the type of content that children should be able to access, we might have avoided some of these kinks in deployment.

On the technical side, most platforms weren’t well-positioned to operationalize these policies. It’s especially difficult if your tech stack at all levels does not have the ability to:

Translate policy language into automated detection classifiers that can at high precision distinguish between violating and non-violating content

Offer a nuanced range of age-appropriate mitigations, e.g. blocking a child from specific posts in a forum instead of from the entire forum

Deploy age checks at the right moment in the user journey
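To make the three capabilities above concrete, here is a minimal Python sketch of a per-post decision function. Everything in it is an assumption made for illustration: the `AgeStatus` and `Action` names, the `ContentSignal` shape, and both thresholds are hypothetical, and the classifier score is assumed to come from whatever detection system a platform already runs. It is not a description of how any platform named in this issue works.

```python
from dataclasses import dataclass
from enum import Enum


class AgeStatus(Enum):
    UNKNOWN = "unknown"        # no age check completed yet
    LIKELY_MINOR = "minor"     # check completed, user assessed as under 18
    VERIFIED_ADULT = "adult"   # check completed, user assessed as 18+


class Action(Enum):
    ALLOW = "allow"
    BLUR_WITH_WARNING = "blur_with_warning"
    PROMPT_AGE_CHECK = "prompt_age_check"
    BLOCK = "block"


@dataclass(frozen=True)
class ContentSignal:
    category: str   # hypothetical policy label, e.g. "violence"
    score: float    # hypothetical classifier confidence in [0, 1]


# Hypothetical thresholds; real values would come from policy and legal review.
CAUTION_THRESHOLD = 0.6
RESTRICT_THRESHOLD = 0.9


def decide(signal: ContentSignal, status: AgeStatus) -> Action:
    """Pick a mitigation for a single post rather than for a whole forum."""
    if status is AgeStatus.VERIFIED_ADULT or signal.score < CAUTION_THRESHOLD:
        return Action.ALLOW
    if signal.score < RESTRICT_THRESHOLD:
        # Borderline content: soften it instead of blocking outright.
        return Action.BLUR_WITH_WARNING
    if status is AgeStatus.UNKNOWN:
        # Ask for an age check only at the moment it is actually needed.
        return Action.PROMPT_AGE_CHECK
    return Action.BLOCK
```

For example, `decide(ContentSignal("violence", 0.95), AgeStatus.UNKNOWN)` returns `Action.PROMPT_AGE_CHECK`, while the same post shown to a user already assessed as a minor returns `Action.BLOCK`. The point of the sketch is the shape of the decision: the age check is triggered by specific content, and low-confidence classifications do not lead to a block.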

Similarly, some of the more egregious examples of spoofing age checks suggest a significant challenge with internal deployment strategies. There was a notable difference in deployment success between the companies that adopted a thoughtful, data-driven vetting and testing process, and the ones that did not gain cross-functional alignment early enough. Developing a thoughtful end-to-end age assurance strategy requires platforms to internally align on important technical, legal, and regulatory questions, well before they decide to engage a vendor or to build a solution in-house.

Finally, when it comes to future innovation, it’s worth defending what works. EFF chose to undermine zero-knowledge proofs, a rare example of privacy-preserving innovation in the age assurance space. In one odd example, the writers expected ZKPs to prevent platforms from collecting things like IP addresses or tracking browser behavior, broader internet infrastructure issues that predate and operate entirely outside ZKP technology. ZKPs are a tool for minimizing data disclosure, not for enforcing policy boundaries on platforms themselves. Instead of dismissing ZKPs, we should appreciate the technological innovation, and develop stronger guardrails around how they’re implemented and who gets to use them.
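To illustrate what "minimizing data disclosure" can mean in practice, here is a toy Python sketch of an "over 18" check in which the verifier never sees a birthdate or an exact age. It is not a zero-knowledge proof: it swaps in a much simpler hash-chain commitment, and it makes several assumptions for brevity. The function names (`issue`, `prove`, `verify`) are hypothetical, and an HMAC with a shared key stands in for the public-key signature a real issuer would use. A production system would rely on a vetted cryptographic library and protocol.

```python
import hashlib
import hmac
import secrets


def hash_n(value: bytes, n: int) -> bytes:
    """Apply SHA-256 to value n times."""
    for _ in range(n):
        value = hashlib.sha256(value).digest()
    return value


def issue(age: int, issuer_key: bytes) -> dict:
    """Issuer, who knows the real age, commits to it as a hash chain of length `age`."""
    seed = secrets.token_bytes(32)
    commitment = hash_n(seed, age)
    # HMAC is a stand-in for a real digital signature (assumption for this sketch).
    tag = hmac.new(issuer_key, commitment, hashlib.sha256).digest()
    return {"seed": seed, "age": age, "commitment": commitment, "tag": tag}


def prove(credential: dict, threshold: int) -> dict:
    """User derives a value that only someone aged >= threshold could produce."""
    if credential["age"] < threshold:
        raise ValueError("cannot prove a threshold above the committed age")
    proof = hash_n(credential["seed"], credential["age"] - threshold)
    # Neither the seed nor the age is included in what the verifier receives.
    return {"proof": proof, "commitment": credential["commitment"], "tag": credential["tag"]}


def verify(presentation: dict, threshold: int, issuer_key: bytes) -> bool:
    """Verifier checks the issuer's tag, then that `threshold` hashes reach the commitment."""
    expected = hmac.new(issuer_key, presentation["commitment"], hashlib.sha256).digest()
    if not hmac.compare_digest(expected, presentation["tag"]):
        return False
    return hmac.compare_digest(hash_n(presentation["proof"], threshold), presentation["commitment"])
```

A user issued a credential for age 25 can call `prove(credential, 18)` and pass `verify(..., 18, issuer_key)`, while the same presentation fails for a threshold of 21. The sketch also shows the limits discussed above: the commitment is a stable value that could be used to link sessions, and nothing here touches IP addresses or browser tracking, which sit outside the proof entirely.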

Is age verification dead?

That’s unlikely. Ahead of the rollout of the Act, I spoke to CNET about how “state laws, advocacy campaigns, and growing parental demand in the US are all converging around the need for age assurance… combine that with rapid advances in the tech ecosystem, and it's no longer a question of if the US adopts age verification, but how and when”. It’s therefore crucial that we try to avoid some of our earlier mistakes around engagement, and:

Robustly stress test age assurance techniques (see some of the great work already done in this space by the National Institute of Standards and Technology (NIST) in the US)

Define responsible implementation practices within organizations so that user privacy can be protected and friction can be minimized

Get legal, product, and engineering teams aligned early and often across the difficult questions that have to be answered for a successful deployment.

The pressure is ramping up, but the opportunity to get it right is still on the table.